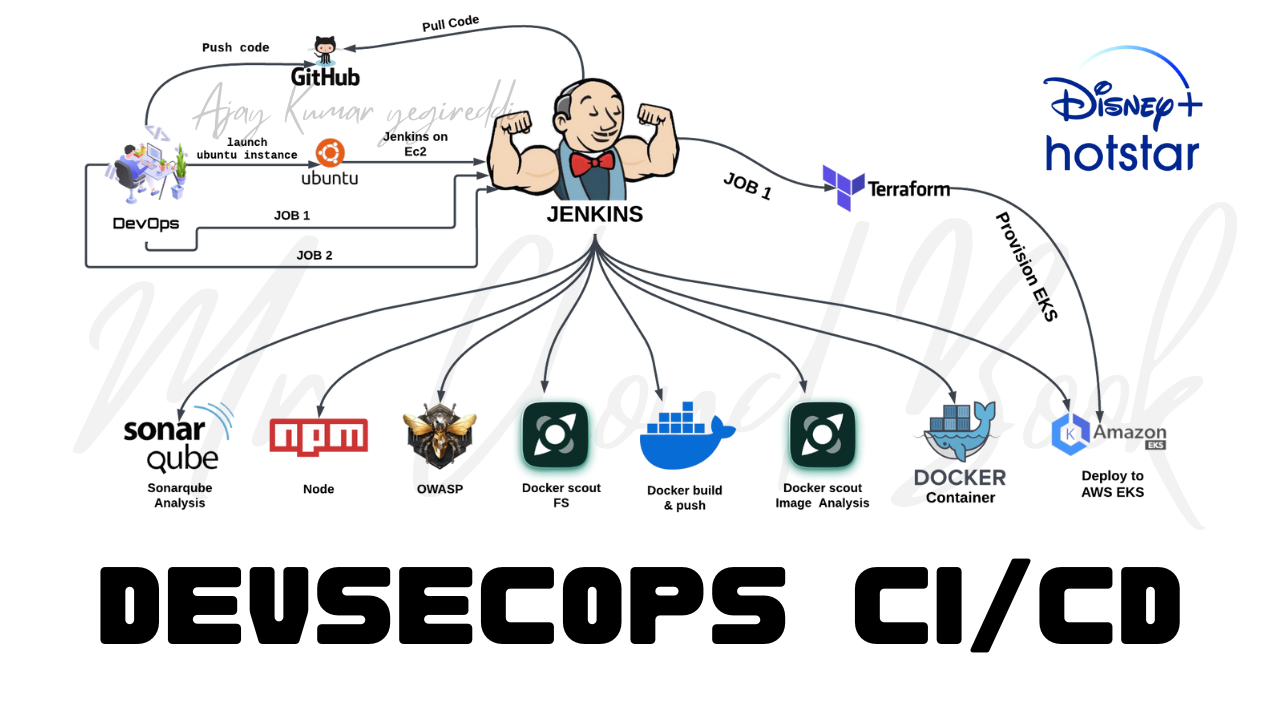

In the ever-evolving landscape of software development and deployment, the integration of robust security practices into the development pipeline has become imperative. DevSecOps, an amalgamation of Development, Security, and Operations, emphasizes the integration of security measures throughout the software development lifecycle, promoting a proactive approach to mitigate potential vulnerabilities and threats.

This blog serves as a comprehensive guide to deploying a Hotstar clone—a popular streaming platform—using the principles of DevSecOps on Amazon Web Services (AWS). The deployment process encompasses the utilization of various tools and services, including Docker, Jenkins, Java, SonarQube, AWS CLI, Kubectl, and Terraform, to automate, secure, and streamline the deployment pipeline.

The journey begins with the setup of an AWS EC2 instance configured with Ubuntu, granting it a specific IAM role to facilitate the learning process. Subsequently, an automated script is crafted to install crucial tools and dependencies required for the deployment pipeline, ensuring efficiency and consistency in the setup process.

Central to this DevSecOps approach is the orchestration through Jenkins jobs, where stages are defined to execute tasks such as creating an Amazon EKS cluster, deploying the Hotstar clone application, and implementing security measures at various stages of the deployment.

Security checks are built into the pipeline rather than added afterwards. Using SonarQube, OWASP Dependency-Check, and Docker Scout, the blog shows how static code analysis, dependency checks, and container security scans are embedded within the pipeline. These measures help catch vulnerabilities before the application is deployed.

GitHub: https://github.com/Aj7Ay/Hotstar-Clone.git

Prerequisites

- AWS account setup

- Basic knowledge of AWS services

- Understanding of DevSecOps principles

- Familiarity with Docker, Jenkins, Java, SonarQube, AWS CLI, Kubectl, Terraform, and Docker Scout

Step-by-Step Deployment Process

Step 1: Setting up AWS EC2 Instance

- Creating an EC2 instance with Ubuntu AMI, t2.large, and 30 GB storage

- Assigning an IAM role with Admin access for learning purposes

Step 2: Installation of Required Tools on the Instance

- Writing a script to automate the installation of:

  - Docker

  - Jenkins

  - Java

  - SonarQube container

  - AWS CLI

  - Kubectl

  - Terraform

Step 3: Jenkins Job Configuration

- Creating Jenkins jobs for:

  - Creating an EKS cluster

  - Deploying the Hotstar clone application

- Configuring the Jenkins job stages:

  - Sending files to SonarQube for static code analysis

  - Running npm install

  - Implementing OWASP for security checks

  - Installing and running Docker Scout for container security

  - Scanning files and Docker images with Docker Scout

  - Building and pushing Docker images

  - Deploying the application to the EKS cluster

Step 4: Clean-Up Process

- Removing the EKS cluster

- Deleting the IAM role

- Terminating the Ubuntu instance

STEP 1A: Setting up AWS EC2 Instance and IAM Role

- Sign in to the AWS Management Console: Access the AWS Management Console using your credentials.

- Navigate to the EC2 Dashboard: Click on the “Services” menu at the top of the page and select “EC2” under the “Compute” section. This will take you to the EC2 Dashboard.

- Launch Instance: Click on the “Instances” link on the left sidebar and then click the “Launch Instance” button.

- Choose an Amazon Machine Image (AMI): In the “Step 1: Choose an Amazon Machine Image (AMI)” section:

- Select “AWS Marketplace” from the left-hand sidebar.

- Search for “Ubuntu” in the search bar and choose the desired Ubuntu AMI (e.g., Ubuntu Server 22.04 LTS).

- Click on “Select” to proceed.

- Choose an Instance Type: In the “Step 2: Choose an Instance Type” section:

- Scroll through the instance types and select “t2.large” from the list.

- Click on “Next: Configure Instance Details” at the bottom.

- Configure Instance Details: In the “Step 3: Configure Instance Details” section, you can leave most settings as default for now. However, you can configure settings like the network, subnet, IAM role, etc., according to your requirements.

- Once done, click on “Next: Add Storage.”

- Add Storage: In the “Step 4: Add Storage” section:

- You can set the size of the root volume (usually /dev/sda1) to 30 GB by specifying the desired size in the “Size (GiB)” field.

- Customize other storage settings if needed.

- Click on “Next: Add Tags” when finished.

- Add Tags (Optional): In the “Step 5: Add Tags” section, you can add tags to your instance for better identification and management. This step is optional but recommended for organizational purposes.

- Click on “Next: Configure Security Group” when done.

- Configure Security Group: In the “Step 6: Configure Security Group” section:

- Create a new security group or select an existing one.

- Ensure that at least SSH (port 22) is open for inbound traffic to allow remote access.

- You might also want to open other ports as needed for your application’s requirements.

- Click on “Review and Launch” when finished.

- Review and Launch: Review the configuration details of your instance. If everything looks good:

- Click on “Launch” to proceed.

- A pop-up will prompt you to select or create a key pair. Choose an existing key pair or create a new one.

- Finally, click on “Launch Instances.”

- Accessing the Instance: Once the instance is launched, you can connect to it using SSH. Use the private key associated with the selected key pair to connect to the instance’s public IP or DNS address.
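The SSH step can be sketched as a small shell helper. This is only an illustration: it assumes the login user is `ubuntu` (the default on official Ubuntu AMIs), and the key file name and IP address in the example call are hypothetical placeholders, not values from this guide.

```shell
#!/bin/sh
# Print the SSH command for connecting to the instance.
# Assumption: "ubuntu" is the login user, which is the default on official
# Ubuntu AMIs. The key file and host in the example call are placeholders.
ssh_command() {
    key_file=$1   # private key (.pem) of the key pair chosen at launch
    host=$2       # public IP or public DNS name of the instance
    printf 'ssh -i %s ubuntu@%s\n' "$key_file" "$host"
}

# OpenSSH rejects private keys that other users can read, so restrict the key
# first (chmod 400 my-key.pem), then run the printed command.
ssh_command "my-key.pem" "203.0.113.10"
```

PuTTY and MobaXterm users enter the same user name, host, and key in the session dialog instead.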

STEP 1B: IAM ROLE

Search for IAM in the search bar of AWS and click on roles.

Click on Create Role

Select entity type as AWS service

Use case as EC2 and click on Next.

For permission policy select Administrator Access (Just for learning purpose), click Next.

Provide a Name for Role and click on Create role.

Role is created.

Now attach this role to the EC2 instance created earlier, so that the cluster can be provisioned from that instance.

Go to EC2 Dashboard and select the instance.

Click on Actions –> Security –> Modify IAM role.

Select the role created earlier and click on Update IAM role.

Connect to the instance using MobaXterm or PuTTY.

Step 2: Installation of Required Tools on the Instance

Scripts to install Required tools

sudo su #Into root

vi script1.sh

Script 1 for Java, Jenkins, and Docker:

#!/bin/bash

sudo apt update -y

wget -O - https://packages.adoptium.net/artifactory/api/gpg/key/public | tee /etc/apt/keyrings/adoptium.asc

echo "deb [signed-by=/etc/apt/keyrings/adoptium.asc] https://packages.adoptium.net/artifactory/deb $(awk -F= '/^VERSION_CODENAME/{print$2}' /etc/os-release) main" | tee /etc/apt/sources.list.d/adoptium.list

sudo apt update -y

sudo apt install temurin-17-jdk -y

/usr/bin/java --version

curl -fsSL https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key | sudo tee /usr/share/keyrings/jenkins-keyring.asc > /dev/null

echo "deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/" | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt-get update -y

sudo apt-get install jenkins -y

sudo systemctl start jenkins

#install docker

# Add Docker's official GPG key:

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg -y

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

# Add the repository to Apt sources:

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \

$(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \

sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

sudo usermod -aG docker ubuntu

newgrp docker

Run the script after giving it permissions:

sudo chmod 777 script1.sh

sh script1.sh

Script 2 for Terraform, kubectl, and AWS CLI

vi script2.sh

Script 2:

#!/bin/bash

#install terraform

sudo apt install wget -y

wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update && sudo apt install terraform -y

#install Kubectl on Jenkins

sudo apt update

sudo apt install curl -y

curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

kubectl version --client

#install Aws cli

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

sudo apt-get install unzip -y

unzip awscliv2.zip

sudo ./aws/install

Give permissions

sudo chmod 777 script2.sh

sh script2.sh

Now run the SonarQube container

sudo chmod 777 /var/run/docker.sock

docker run -d --name sonar -p 9000:9000 sonarqube:lts-community

The EC2 instance is created in the AWS console.

Now copy the public IP address of ec2 and paste it into the browser

<ec2-ip>:8080 # you will see the Jenkins login page

Connect to your instance with PuTTY or MobaXterm and run the command below to get the administrator password:

sudo cat /var/lib/jenkins/secrets/initialAdminPassword

Now, install the suggested plugins.

Jenkins will now install the suggested plugins and their dependencies.

Create an admin user

Click on save and continue.

Jenkins Dashboard

Now copy the public IP again and open it in a new browser tab on port 9000:

<ec2-ip>:9000 # SonarQube container

Enter the default username and password, click on Log in, and change the password when prompted.

username: admin

password: admin

After you set the new password, the SonarQube dashboard appears.

Now go back to the terminal and check whether Docker, the AWS CLI, Terraform, and kubectl are installed.

docker --version

aws --version

terraform --version

kubectl version --client
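The same check can be scripted so that a missing tool is reported in one pass. A minimal sketch, assuming the four commands above are the ones you care about; the function name `check_tools` is made up for this example.

```shell
#!/bin/sh
# Report which of the given commands are missing from PATH.
# Returns 0 when every tool is found, 1 otherwise.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Tools installed by script1.sh and script2.sh in this guide.
check_tools docker aws terraform kubectl || echo "Install the missing tools before continuing."
```

The script only looks the commands up on PATH; it does not confirm that the Docker daemon or Jenkins service is actually running.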

Step 3: Jenkins Job Configuration

Step 3A: EKS Provision job

With the tools installed, go to Jenkins and add the Terraform plugin so that a pipeline job can provision AWS EKS.

Go to Jenkins dashboard –> Manage Jenkins –> Plugins

Under Available Plugins, search for Terraform and install it.

Go back to the terminal and find the path to Terraform with the command below (we will use it in the Terraform section of Tools):

which terraform

Now come back to Manage Jenkins –> Tools

Add Terraform in the Tools section.

Click on Apply and Save.

Note: change the S3 bucket name in backend.tf to a bucket that you own.
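If you prefer to make the bucket change from the command line, the edit can be sketched as below. This assumes backend.tf contains a single line of the form `bucket = "..."`, which is the usual shape of a Terraform S3 backend block; the function name and the bucket name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Replace the value on the `bucket = "..."` line of a Terraform backend file.
# Assumption: the file has one such line; all other lines are left untouched.
set_backend_bucket() {
    file=$1     # path to backend.tf
    bucket=$2   # name of an S3 bucket you own
    tmp="${file}.tmp"
    # Keep the indentation and the `bucket =` prefix, swap only the quoted value.
    sed "s|^\([[:space:]]*bucket[[:space:]]*=[[:space:]]*\)\"[^\"]*\"|\1\"${bucket}\"|" "$file" > "$tmp" \
        && mv "$tmp" "$file"
}

# Usage (the path is an assumption based on the EKS_TERRAFORM directory used by the pipeline):
#   set_backend_bucket EKS_TERRAFORM/backend.tf my-unique-state-bucket
```

Because the Jenkins job checks the code out from Git, the change has to be committed to your own fork of the repository for the pipeline to pick it up.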

Now create a new job for the EKS provisioning.

I want this job to take a build parameter, so that apply or destroy is chosen at build time.

Add a parameter named action inside the job, as in the image below. The pipeline reads it as ${action}.
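To make the role of the build parameter concrete, here is a small sketch of how its value turns into the Terraform command that the last pipeline stage runs. The guard against unexpected values is an addition for illustration and is not part of the original job; the function names are made up.

```shell
#!/bin/sh
# Accept only the two values the job is meant to offer.
validate_action() {
    case "$1" in
        apply|destroy) return 0 ;;
        *) echo "unsupported action: '$1' (expected apply or destroy)" >&2
           return 1 ;;
    esac
}

# Print the Terraform command that corresponds to the chosen action.
terraform_command() {
    validate_action "$1" || return 1
    printf 'terraform %s --auto-approve\n' "$1"
}

# Example: the command for a build started with action=apply.
terraform_command apply
```

Defining the parameter as a choice with only these two values gives the same protection inside Jenkins itself.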

Let’s add a pipeline

pipeline{

agent any

stages {

stage('Checkout from Git'){

steps{

git branch: 'main', url: 'https://github.com/Aj7Ay/Hotstar-Clone.git'

}

}

stage('Terraform version'){

steps{

sh 'terraform --version'

}

}

stage('Terraform init'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform init'

}

}

}

stage('Terraform validate'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform validate'

}

}

}

stage('Terraform plan'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform plan'

}

}

}

stage('Terraform apply/destroy'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform ${action} --auto-approve'

}

}

}

}

}

Click on Apply and Save, then choose Build with Parameters and select apply as the action.

Stage view: provisioning can take up to about 10 minutes.

Check in your AWS console whether the EKS cluster was created.

An EC2 instance is created for the node group.

Step 3B: Hotstar job

Plugins installation & setup (Java, Sonar, Nodejs, owasp, Docker)

Go to Jenkins dashboard

Manage Jenkins –> Plugins –> Available Plugins

Search for the plugins below:

Eclipse Temurin installer

SonarQube Scanner

NodeJS

OWASP Dependency-Check

Docker

Docker Commons

Docker Pipeline

Docker API

Docker-build-step

Configure in Global Tool Configuration

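Go to Manage Jenkins → Tools → install JDK (17) and NodeJS (16) → click on Apply and Save.

Note: use only Node.js 16.

For SonarQube, use the latest version.

For OWASP, use version 9.0.7.

Use the latest version of Docker.

Name the tools jdk17, node16, sonar-scanner, DP-Check, and docker, because the pipeline refers to them by these names. Click on Apply and Save.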

Configure Sonar Server in Manage Jenkins

Grab the public IP address of your EC2 instance. SonarQube runs on port 9000, so open <Public IP>:9000 to reach your SonarQube server. Click on Administration → Security → Users → click on Tokens and Update Token → give it a name → click on Generate Token.

click on update Token

Create a token with a name and generate

copy Token

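Go to Jenkins Dashboard → Manage Jenkins → Credentials → Add Secret Text. Use Sonar-token as the ID, because the pipeline refers to the credential by that name. It should look like this

You will see this page once you click on Create.

Now go to Dashboard → Manage Jenkins → System and add the SonarQube server as in the image below. The pipeline refers to it as sonar-server.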

Click on Apply and Save

In the SonarQube dashboard, also add a webhook so that the quality gate result is sent back to Jenkins.

Administration –> Configuration –> Webhooks

Click on Create

Add details

# in the URL field of the webhook

http://<jenkins-public-ip>:8080/sonarqube-webhook/
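The webhook URL is easy to mistype, so here is a small sketch that builds it from the Jenkins host. The function name and the example IP are hypothetical; port 8080 is the Jenkins port used throughout this guide.

```shell
#!/bin/sh
# Build the SonarQube webhook URL that points back at Jenkins.
# The path ends with a trailing slash, which is part of the endpoint.
sonar_webhook_url() {
    jenkins_host=$1            # public IP or DNS name of the Jenkins instance
    jenkins_port=${2:-8080}    # Jenkins listens on 8080 in this guide
    printf 'http://%s:%s/sonarqube-webhook/\n' "$jenkins_host" "$jenkins_port"
}

# Example with a placeholder address:
sonar_webhook_url "203.0.113.10"
```

Paste the printed value into the URL field of the webhook form.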

Now add Docker Hub credentials to Jenkins so that the pipeline can log in and push the image.

Manage Jenkins –> Credentials –> global –> Add Credentials

Add your Docker Hub username and password under Global Credentials, with the ID docker (the pipeline uses credentialsId: 'docker').

Create.

Pipeline up to Docker

Now let’s create a new job for our pipeline.

Before adding the pipeline, install Docker Scout:

docker login #use credentials to login

curl -sSfL https://raw.githubusercontent.com/docker/scout-cli/main/install.sh | sh -s -- -b /usr/local/bin

Add this to the pipeline, replacing sevenajay in the image names with your own Docker Hub username:

pipeline{

agent any

tools{

jdk 'jdk17'

nodejs 'node16'

}

environment {

SCANNER_HOME=tool 'sonar-scanner'

}

stages {

stage('clean workspace'){

steps{

cleanWs()

}

}

stage('Checkout from Git'){

steps{

git branch: 'main', url: 'https://github.com/Aj7Ay/Hotstar-Clone.git'

}

}

stage("Sonarqube Analysis "){

steps{

withSonarQubeEnv('sonar-server') {

sh ''' $SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Hotstar \

-Dsonar.projectKey=Hotstar'''

}

}

}

stage("quality gate"){

steps {

script {

waitForQualityGate abortPipeline: false, credentialsId: 'Sonar-token'

}

}

}

stage('Install Dependencies') {

steps {

sh "npm install"

}

}

stage('OWASP FS SCAN') {

steps {

dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'

dependencyCheckPublisher pattern: '**/dependency-check-report.xml'

}

}

stage('Docker Scout FS') {

steps {

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh 'docker-scout quickview fs://.'

sh 'docker-scout cves fs://.'

}

}

}

}

stage("Docker Build & Push"){

steps{

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh "docker build -t hotstar ."

sh "docker tag hotstar sevenajay/hotstar:latest "

sh "docker push sevenajay/hotstar:latest"

}

}

}

}

stage('Docker Scout Image') {

steps {

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh 'docker-scout quickview sevenajay/hotstar:latest'

sh 'docker-scout cves sevenajay/hotstar:latest'

sh 'docker-scout recommendations sevenajay/hotstar:latest'

}

}

}

}

stage("deploy_docker"){

steps{

sh "docker run -d --name hotstar -p 3000:3000 sevenajay/hotstar:latest"

}

}

}

}

Click on Apply and Save.

Build now

Stage view

To see the report, you can go to Sonarqube Server and go to Projects.

You can see the report has been generated and the status shows as passed. You can see that there are 854 lines it scanned. To see a detailed report, you can go to issues.

For OWASP Dependency-Check, the job status page shows a graph along with the vulnerabilities that were found.

Let’s See Docker Scout File scan report

When you log in to Dockerhub, you will see a new image is created

Let’s See Docker Scout Image analysis

Quickview

Cves

Recommendations

Deploy to Container

<ec2-ip>:3000

Output

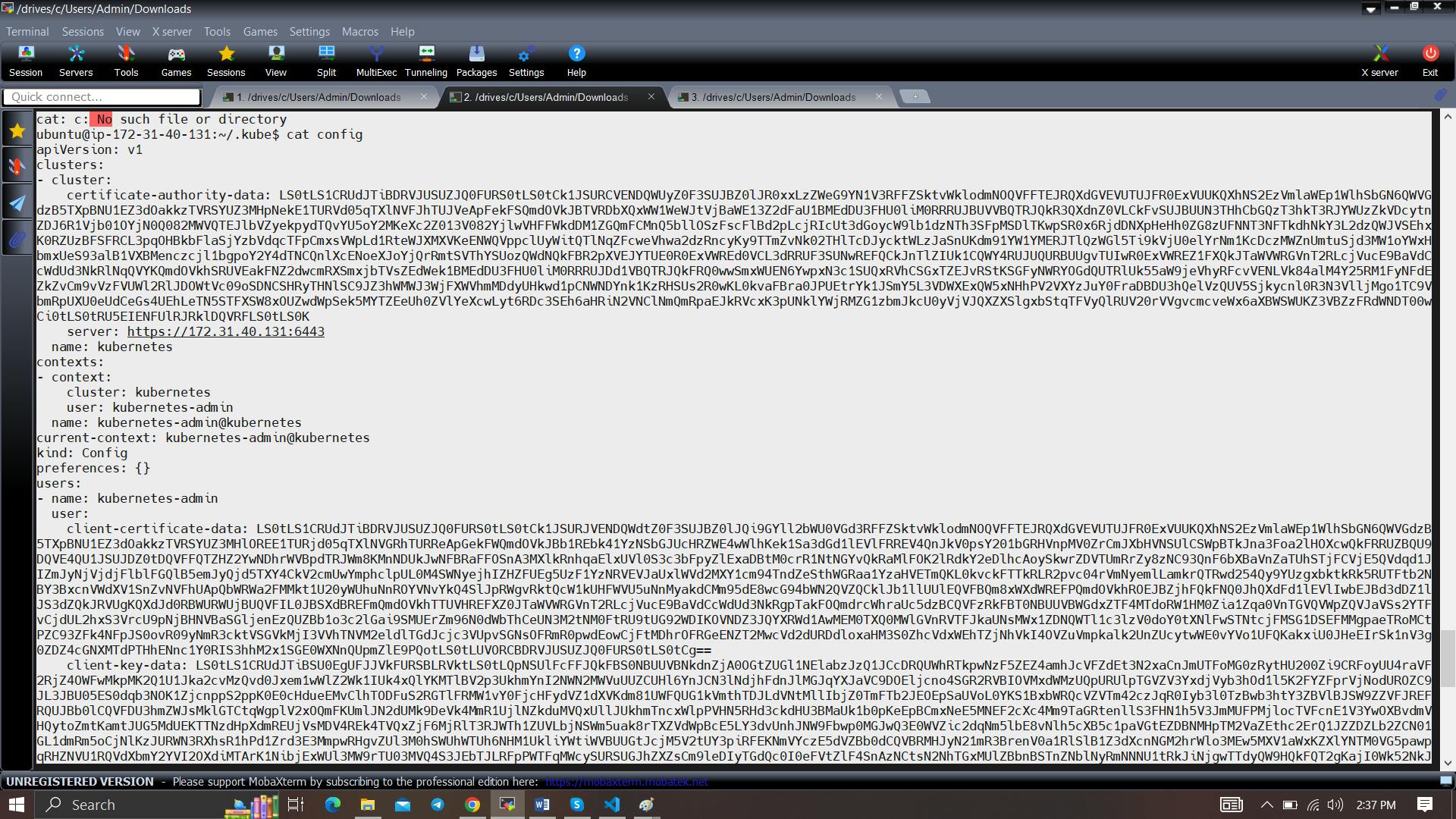

In the SSH session (PuTTY) of your Jenkins instance, enter the command below:

aws eks update-kubeconfig --name <CLUSTER_NAME> --region <CLUSTER_REGION>

For the cluster created in this guide:

aws eks update-kubeconfig --name EKS_CLOUD --region ap-south-1

Let’s see the nodes

kubectl get nodes

Now run this command in the CLI:

cat /root/.kube/config

Copy the config file contents to the Jenkins master or your local machine.

Save them as secret-file.txt, for example in your Documents folder.

Note: this secret-file.txt is the file you provide in the Kubernetes credential section.

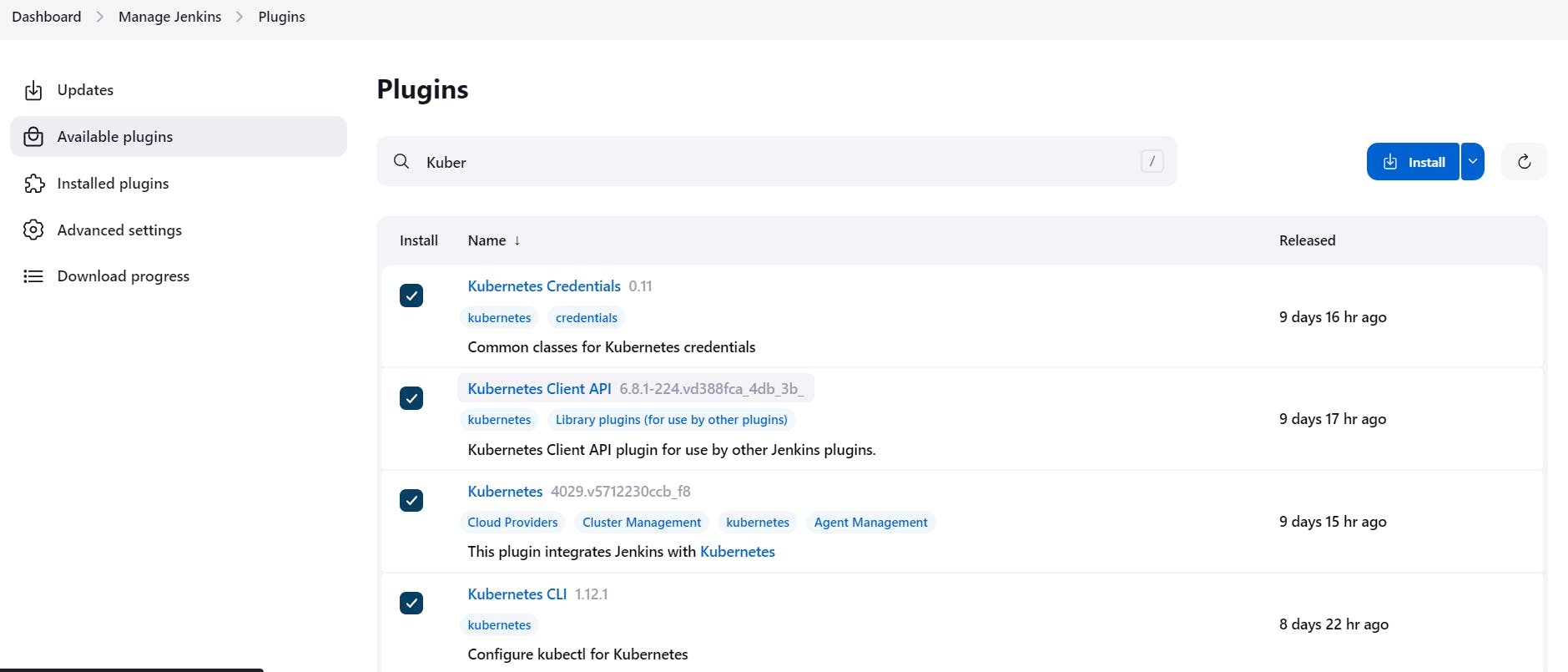

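Install the Kubernetes plugin. Once it is installed successfully,

go to Manage Jenkins –> Manage Credentials –> click on Jenkins global –> Add Credentials, and add secret-file.txt with the ID k8s, which the stage below uses.

This is the final step to deploy on the Kubernetes cluster. Add this stage to the pipeline: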

stage('Deploy to Kubernetes'){

steps{

script{

dir('K8S') {

withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'k8s', namespace: '', restrictKubeConfigAccess: false, serverUrl: '') {

sh 'kubectl apply -f deployment.yml'

sh 'kubectl apply -f service.yml'

}

}

}

}

}

Run this command after the pipeline succeeds:

kubectl get all

Allow traffic from the load balancer in the security group of the cluster's EC2 instance, then copy the load balancer link and open it in a browser.

You will see output like this.
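Instead of copying the load balancer link from the `kubectl get all` output by hand, it can be read with a JSONPath query. A minimal sketch: the function name is made up, and the service name is passed in as an argument because it depends on what service.yml defines.

```shell
#!/bin/sh
# Print the external hostname of a LoadBalancer service.
# Assumption: the service name is supplied by the caller; look it up with
# `kubectl get svc`, since it depends on what service.yml defines.
lb_hostname() {
    kubectl get svc "$1" -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Usage (requires kubectl access to the cluster):
#   echo "http://$(lb_hostname <service-name>)"
```

The hostname can be empty for a short time after the service is created, while AWS is still provisioning the load balancer.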

Step 4: Destruction

Now go to the Jenkins dashboard and click on the Terraform-Eks job.

Build with parameters and choose the destroy action.

This deletes the EKS cluster that was provisioned.

Deletion takes about 10 minutes. Wait for it to finish, and don’t remove the EC2 instance until then.

Cluster deleted

Delete the EC2 instance and the IAM role.

Also check that the load balancer has been deleted.

Congratulations on completing the journey of deploying your Hotstar clone using DevSecOps practices on AWS! This process has highlighted the power of integrating security measures seamlessly into the deployment pipeline, ensuring not only efficiency but also a robust shield against potential threats.

Key Highlights:

- Leveraging AWS services, Docker, Jenkins, and security tools, we orchestrated a secure and automated deployment pipeline.

- Implementing DevSecOps principles helped fortify the application against vulnerabilities through continuous security checks.

- The seamless integration of static code analysis, container security, and automated deployment showcases the strength of DevSecOps methodologies.

What’s Next? Continue exploring the realm of DevSecOps and cloud technologies. Experiment with new tools, refine your deployment pipelines, and delve deeper into securing your applications effectively.

Stay Connected: We hope this guide has been insightful and valuable for your learning journey. Don’t hesitate to reach out with questions, feedback, or requests for further topics.

Thank you for embarking on this DevSecOps journey with us. Keep innovating, securing, and deploying your applications with confidence!
