This guide walks through deploying a two-tier Flask application with MySQL on Amazon Elastic Kubernetes Service (EKS), orchestrated through Jenkins CI/CD pipelines. It also covers monitoring the infrastructure with Prometheus and Grafana, with InfluxDB storing the Jenkins pipeline data.

Two-Tier Flask Application with MySQL:

- The two-tier architecture comprises a frontend Flask application serving user requests and a backend MySQL database storing application data.

- Flask provides a lightweight and flexible framework for building web applications, while MySQL offers a reliable and scalable relational database management system.

Prerequisites:

- AWS Account: To get started, you’ll need an active AWS account. Ensure that you have access and permission to create and manage AWS resources.

- AWS CLI: Install the AWS Command Line Interface (CLI) on your local machine and configure it with your AWS credentials. This is essential for managing your AWS resources.

- IAM User and Key Pair: Create an IAM (Identity and Access Management) user with the necessary permissions to provision resources on AWS. Additionally, generate an IAM Access Key and Secret Access Key for programmatic access. Ensure that you securely manage these credentials.

- S3 Bucket: Set up an S3 bucket to store your Terraform state files. This bucket is crucial for maintaining the state of your infrastructure and enabling collaboration.

- Terraform: Install Terraform on your local machine. Terraform is used for provisioning infrastructure as code and managing AWS resources. Make sure to configure Terraform to work with your AWS credentials and your S3 bucket for state storage.

Step 1: How to install and set up Terraform on Windows

Download Terraform:

Visit the official Terraform website: terraform.io/downloads.html

Extract the ZIP Archive:

Once the download is complete, extract the contents of the ZIP archive to a directory on your computer. You can use a tool like 7-Zip or the built-in Windows extraction tool. Ensure that you extract it to a directory that’s part of your system’s PATH.

In my case, I created a Terraform directory on the C: drive, extracted the archive there, and copied the directory path.

Add Terraform to Your System’s PATH:

To make Terraform easily accessible from the command prompt, add the directory where Terraform is extracted to your system’s PATH environment variable. Follow these steps:

Search for “Environment Variables” in your Windows search bar and click “Edit the system environment variables.”

In the “System Properties” window, click the “Environment Variables” button.

Under “User variables for Admin,” find the “Path” variable and click “Edit.”

Click “New”, paste the copied path, and click “OK”.

Under “System variables,” find the “Path” variable and click “Edit.”

Click “New” and add the path to the directory where you extracted Terraform (e.g., C:\path\to\terraform).

Click “OK” to close the Environment Variables windows.

Click “OK” again to close the System Properties window.

Verify the Installation:

Open a new Command Prompt or PowerShell window.

Type terraform --version and press Enter. This command should display the Terraform version, confirming that Terraform is installed and in your PATH.

Your Terraform installation is now complete, and you can start using Terraform to manage your infrastructure as code.

On macOS, install Terraform with Homebrew using HashiCorp's tap:

```bash
brew tap hashicorp/tap
brew install hashicorp/tap/terraform
```

Step 2: Download the AWS CLI Installer:

Visit the AWS CLI Downloads page: aws.amazon.com/cli

Under “Install the AWS CLI,” click on the “64-bit” link to download the AWS CLI installer for Windows.

Run the Installer:

Locate the downloaded installer executable (e.g., AWSCLIV2.exe) and double-click it to run the installer.

Click Next, accept the license terms and click Next, click Next again, click Install, and then click Finish. The AWS CLI is now installed.

Verify the Installation:

Open a Command Prompt or PowerShell window.

Type aws --version and press Enter. This command should display the AWS CLI version, confirming that the installation was successful.

On macOS, install the AWS CLI with Homebrew:

```bash
brew install awscli
```

Step 3: Create an IAM user

Navigate to the AWS console

Click the “Search” field.

Search for IAM

Click “Users”

Click “Add users”

Click the “User name” field.

Type a user name, for example “Terraform”.

Click Next

Click “Attach policies directly”

Select the checkbox for the AdministratorAccess policy.

Click “Next”

Click “Create user”

Click the newly created user (in my case, Ajay).

Click “Security credentials”

Click “Create access key”

Select the “Command Line Interface (CLI)” use case.

Tick the confirmation checkbox.

Click Next

Click “Create access key”

Download the .csv file and keep it somewhere safe. The secret access key cannot be viewed again after you leave this page.

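Step 4: Configure the AWS CLI

Open a terminal (VS Code, Command Prompt, or whichever you prefer) and run:

```bash
aws configure
```

When prompted, enter your AWS access key ID and secret access key, followed by your default region (for example, ap-south-1) and output format.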
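If you prefer not to type the keys interactively, you can write the credentials file directly from the .csv downloaded in Step 3. The sketch below is a hypothetical helper and is not part of the original setup. It assumes the CSV has a header row followed by a row whose first two columns are the access key ID and the secret access key. It appends a profile in the standard ~/.aws/credentials format.

```shell
# Hypothetical helper (not part of the original setup): append an AWS
# credentials profile built from the access-key CSV downloaded in Step 3.
# Assumption: line 1 is a header and line 2 is "<key id>,<secret>,...".
write_aws_credentials() {
  csv_file=$1
  creds_file=$2
  profile=${3:-default}

  # First data row, with Windows line endings removed.
  row=$(sed -n '2p' "$csv_file" | tr -d '\r')
  # -s makes cut print nothing when the row has no comma at all.
  key_id=$(printf '%s\n' "$row" | cut -s -d, -f1)
  secret=$(printf '%s\n' "$row" | cut -s -d, -f2)

  if [ -z "$key_id" ] || [ -z "$secret" ]; then
    echo "could not read an access key from $csv_file" >&2
    return 1
  fi

  mkdir -p "$(dirname "$creds_file")"
  # The subshell keeps the restrictive umask local: a newly created
  # credentials file is readable and writable by its owner only.
  (
    umask 077
    {
      printf '[%s]\n' "$profile"
      printf 'aws_access_key_id = %s\n' "$key_id"
      printf 'aws_secret_access_key = %s\n' "$secret"
    } >> "$creds_file"
  )
}
```

For example, run write_aws_credentials ~/Downloads/Terraform_accessKeys.csv ~/.aws/credentials (the CSV file name is illustrative). Then set the region with aws configure set region ap-south-1. Running the helper twice appends a duplicate profile, so edit the file by hand when you rotate keys.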

Step 5: Terraform files and provisioning

Clone the repository and change into the Jenkins-terraform directory:

```bash
git clone https://github.com/Aj7Ay/two-tier-flask-app.git
cd two-tier-flask-app
cd Jenkins-terraform
```

In the backend.tf file, update your S3 bucket name and change the key path to your name:

```hcl
terraform {
  backend "s3" {
    bucket = ""                   # Replace with your actual S3 bucket name
    key    = "/terraform.tfstate" # Change this path to include your name
    region = "ap-south-1"
  }
}
```
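If you provision from more than one machine, you may find it easier to generate backend.tf than to edit it by hand. This is a hypothetical helper, not part of the repository. The bucket name and key in the example below are illustrative assumptions, and the helper itself never contacts AWS.

```shell
# Hypothetical helper: write backend.tf for the S3 backend shown above.
# Bucket, key and region come from the caller; nothing here contacts AWS.
write_backend_tf() {
  dir=$1
  bucket=$2
  key=$3
  region=${4:-ap-south-1}

  if [ -z "$bucket" ] || [ -z "$key" ]; then
    echo "usage: write_backend_tf DIR BUCKET KEY [REGION]" >&2
    return 1
  fi

  # Unquoted heredoc delimiter, so $bucket, $key and $region are expanded.
  cat > "$dir/backend.tf" <<EOF
terraform {
  backend "s3" {
    bucket = "$bucket"
    key    = "$key"
    region = "$region"
  }
}
EOF
}
```

For example, run write_backend_tf . my-tf-state-bucket ajay/terraform.tfstate. Terraform can also receive these values at init time through partial backend configuration, for example terraform init -backend-config="bucket=my-tf-state-bucket". This keeps the bucket name out of version control.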

Now create the EC2 instance. Terraform creates the server, and the instance's startup script then installs all the tools:

```bash
terraform init
terraform validate
terraform plan
terraform apply
```
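You can also run the same sequence without any prompts. This is a minimal sketch, not the repository's own script. It saves the plan to a file (tfplan, an arbitrary name) so that apply executes exactly the plan you reviewed. TF is a hypothetical override (for example TF=echo) that lets you dry-run the sequence.

```shell
# Minimal sketch: run init, validate, plan and apply without prompts.
# TF is an assumed override (e.g. TF=echo) for dry runs; the plan file
# name "tfplan" is arbitrary.
TF=${TF:-terraform}

tf_provision() {
  plan_file=${1:-tfplan}
  # Each step runs only if the previous one succeeded. Applying a saved
  # plan file does not ask for confirmation.
  "$TF" init -input=false &&
    "$TF" validate &&
    "$TF" plan -input=false -out="$plan_file" &&
    "$TF" apply -input=false "$plan_file"
}
```

Between plan and apply you can inspect the saved plan with terraform show tfplan.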

Here is the full script that installs the required tools:

```bash
#!/bin/bash
# Log all output of this script to /var/log/user-data.log (and the console)
exec > >(tee -i /var/log/user-data.log)
exec 2>&1

sudo apt update -y
sudo apt install software-properties-common -y
sudo add-apt-repository --yes --update ppa:ansible/ansible
sudo apt install ansible -y
sudo apt install git -y

mkdir Ansible && cd Ansible
pwd
git clone https://github.com/Aj7Ay/ANSIBLE.git
cd ANSIBLE
ansible-playbook -i localhost DevSecOps.yml
```
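The script logs to /var/log/user-data.log, so you can check on the server whether provisioning has finished. The helper below is hypothetical. It assumes that the PLAY RECAP summary, which ansible-playbook normally prints at the end of a run, appears in that log.

```shell
# Hypothetical helper: report whether the Ansible run recorded in the
# user-data log has finished ("done"), failed ("failed"), or is still in
# progress ("running", also printed while the log does not exist yet).
playbook_status() {
  log=${1:-/var/log/user-data.log}
  # Keep only the recap lines, which carry counters such as "failed=0".
  recap=$(sed -n '/PLAY RECAP/,$p' "$log" 2>/dev/null | grep 'failed=' || true)
  if [ -z "$recap" ]; then
    echo running
  elif printf '%s\n' "$recap" | grep -q 'failed=[1-9]'; then
    echo failed
  else
    echo done
  fi
}
```

On the instance, playbook_status reports when it is safe to open Jenkins. To watch the run live, use tail -f /var/log/user-data.log.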

Here is what the DevSecOps.yml playbook contains:

```yaml
---
- name: Install DevOps Tools
  hosts: localhost
  become: yes
  tasks:
    - name: Update apt cache
      apt:
        update_cache: yes

    - name: Install common packages
      apt:
        name:
          - gnupg
          - software-properties-common
          - apt-transport-https
          - ca-certificates
          - curl
          - wget
          - unzip
        state: present

    # Jenkins installation
    - name: Update all packages to their latest version
      apt:
        name: "*"
        state: latest

    - name: Download Jenkins key
      ansible.builtin.get_url:
        url: https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key
        dest: /usr/share/keyrings/jenkins-keyring.asc

    - name: Add Jenkins repo
      ansible.builtin.apt_repository:
        repo: "deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/"
        state: present
        filename: jenkins  # the module appends ".list" itself

    - name: Install fontconfig
      ansible.builtin.apt:
        name: fontconfig
        state: present

    - name: Install Java
      ansible.builtin.apt:
        name: openjdk-17-jre
        state: present

    - name: Install Jenkins
      ansible.builtin.apt:
        name: jenkins
        state: present

    - name: Ensure Jenkins service is running
      ansible.builtin.systemd:
        name: jenkins
        state: started
        enabled: yes

    # HashiCorp (Terraform) setup
    - name: Add HashiCorp GPG key
      ansible.builtin.get_url:
        url: https://apt.releases.hashicorp.com/gpg
        dest: /usr/share/keyrings/hashicorp-archive-keyring.asc
        mode: '0644'

    - name: Add HashiCorp repository
      ansible.builtin.apt_repository:
        repo: "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.asc] https://apt.releases.hashicorp.com {{ ansible_distribution_release }} main"
        state: present
        filename: hashicorp
        update_cache: yes

    - name: Install Terraform
      ansible.builtin.apt:
        name: terraform
        state: present
        update_cache: yes

    # Snyk installation
    - name: Download and install Snyk
      ansible.builtin.get_url:
        url: https://static.snyk.io/cli/latest/snyk-linux
        dest: /usr/local/bin/snyk
        mode: '0755'

    # Helm installation
    - name: Download Helm installation script
      ansible.builtin.get_url:
        url: https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
        dest: /tmp/get_helm.sh
        mode: '0700'

    - name: Install Helm
      ansible.builtin.command: /tmp/get_helm.sh

    # Kubernetes tools installation
    - name: Create Kubernetes directory
      ansible.builtin.file:
        path: /usr/local/bin
        state: directory
        mode: '0755'

    - name: Download kubectl
      ansible.builtin.get_url:
        url: https://dl.k8s.io/release/v1.28.0/bin/linux/amd64/kubectl
        dest: /usr/local/bin/kubectl
        mode: '0755'

    - name: Download kubeadm
      ansible.builtin.get_url:
        url: https://dl.k8s.io/release/v1.28.0/bin/linux/amd64/kubeadm
        dest: /usr/local/bin/kubeadm
        mode: '0755'

    - name: Download kubelet
      ansible.builtin.get_url:
        url: https://dl.k8s.io/release/v1.28.0/bin/linux/amd64/kubelet
        dest: /usr/local/bin/kubelet
        mode: '0755'

    - name: Verify kubectl installation
      ansible.builtin.command: kubectl version --client
      register: kubectl_version
      changed_when: false

    - name: Verify kubeadm installation
      ansible.builtin.command: kubeadm version
      register: kubeadm_version
      changed_when: false

    - name: Display Kubernetes tools versions
      ansible.builtin.debug:
        var: item
      loop:
        - "{{ kubectl_version.stdout }}"
        - "{{ kubeadm_version.stdout }}"

    - name: Download kubelet service file
      ansible.builtin.get_url:
        url: https://raw.githubusercontent.com/kubernetes/release/v0.4.0/cmd/kubepkg/templates/latest/deb/kubelet/lib/systemd/system/kubelet.service
        dest: /etc/systemd/system/kubelet.service
        mode: '0644'

    - name: Create kubelet service directory
      ansible.builtin.file:
        path: /etc/systemd/system/kubelet.service.d
        state: directory
        mode: '0755'

    - name: Download kubelet service config
      ansible.builtin.get_url:
        url: https://raw.githubusercontent.com/kubernetes/release/v0.4.0/cmd/kubepkg/templates/latest/deb/kubeadm/10-kubeadm.conf
        dest: /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
        mode: '0644'

    - name: Enable and start kubelet service
      ansible.builtin.systemd:
        name: kubelet
        state: started
        enabled: yes
        daemon_reload: yes

    # Docker installation
    - name: Add Docker GPG apt Key
      ansible.builtin.apt_key:
        url: https://download.docker.com/linux/ubuntu/gpg
        state: present

    - name: Add Docker Repository
      ansible.builtin.apt_repository:
        repo: "deb [arch=amd64] https://download.docker.com/linux/ubuntu {{ ansible_distribution_release }} stable"
        state: present
        filename: docker

    - name: Update apt and install docker-ce
      ansible.builtin.apt:
        name:
          - docker-ce
          - docker-ce-cli
          - containerd.io
        state: present
        update_cache: yes

    - name: Add user to docker group
      ansible.builtin.user:
        name: ubuntu
        groups: docker
        append: yes

    - name: Set permissions for Docker socket
      ansible.builtin.file:
        path: /var/run/docker.sock
        mode: '0777'

    - name: Restart Docker service
      ansible.builtin.systemd:
        name: docker
        state: restarted
        daemon_reload: yes

    # AWS CLI installation
    - name: Check if AWS CLI is installed
      ansible.builtin.command: aws --version
      register: awscli_version
      ignore_errors: yes
      changed_when: false

    - name: Download AWS CLI installer
      ansible.builtin.unarchive:
        src: https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip
        dest: /tmp
        remote_src: yes
      when: awscli_version.rc != 0

    - name: Install AWS CLI
      ansible.builtin.command: /tmp/aws/install
      when: awscli_version.rc != 0

    - name: Clean up AWS CLI installer
      ansible.builtin.file:
        path: /tmp/aws
        state: absent

    # Trivy installation
    - name: Download Trivy GPG key
      ansible.builtin.get_url:
        url: https://aquasecurity.github.io/trivy-repo/deb/public.key
        dest: /tmp/trivy.key
        mode: '0644'

    - name: Add Trivy GPG key
      ansible.builtin.shell: |
        gpg --dearmor < /tmp/trivy.key | tee /usr/share/keyrings/trivy.gpg > /dev/null

    - name: Add Trivy repository
      ansible.builtin.shell: |
        echo "deb [signed-by=/usr/share/keyrings/trivy.gpg] https://aquasecurity.github.io/trivy-repo/deb $(lsb_release -cs) main" | sudo tee -a /etc/apt/sources.list.d/trivy.list

    - name: Update apt cache
      ansible.builtin.apt:
        update_cache: yes

    - name: Install Trivy
      ansible.builtin.apt:
        name: trivy
        state: present
```

This installs Jenkins, Docker, the AWS CLI, Terraform, Helm, Trivy, Snyk, and kubectl.

Once the apply completes, you can see the new EC2 instance in the AWS console.

Now copy the public IP address of the EC2 instance and open it in the browser on port 8080:

ec2-ip:8080 # you will see the Jenkins login page
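The instance keeps installing tools after terraform apply returns, so port 8080 may not answer right away. Here is a minimal polling sketch using curl. The function name, attempt count, and delay are illustrative assumptions.

```shell
# Minimal polling sketch (function name and defaults are illustrative):
# retry an HTTP GET until it succeeds or the attempts run out. Needs curl.
wait_for_http() {
  url=$1
  attempts=${2:-30}
  delay=${3:-10}

  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f treats HTTP error codes (for example a 503 while Jenkins is still
    # starting) as failure; --max-time bounds each attempt to 5 seconds.
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "up: $url"
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "gave up waiting for $url" >&2
  return 1
}
```

For example, wait_for_http http://ec2-ip:8080/login 30 10 waits up to about five minutes for the login page.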

Connect to your instance with PuTTY or MobaXterm and run the following command to get the administrator password:

```bash
sudo cat /var/lib/jenkins/secrets/initialAdminPassword
```

Now, install the suggested plugins.

Jenkins will now install the plugins and their dependencies.

Create an admin user.

Click Save and Continue.

You will now see the Jenkins dashboard.

Create a SonarQube container:

```bash
docker run -d --name sonar -p 9000:9000 sonarqube:lts-community
```

Now copy the public IP again and open it in a new browser tab on port 9000:

ec2-ip:9000 # the SonarQube container

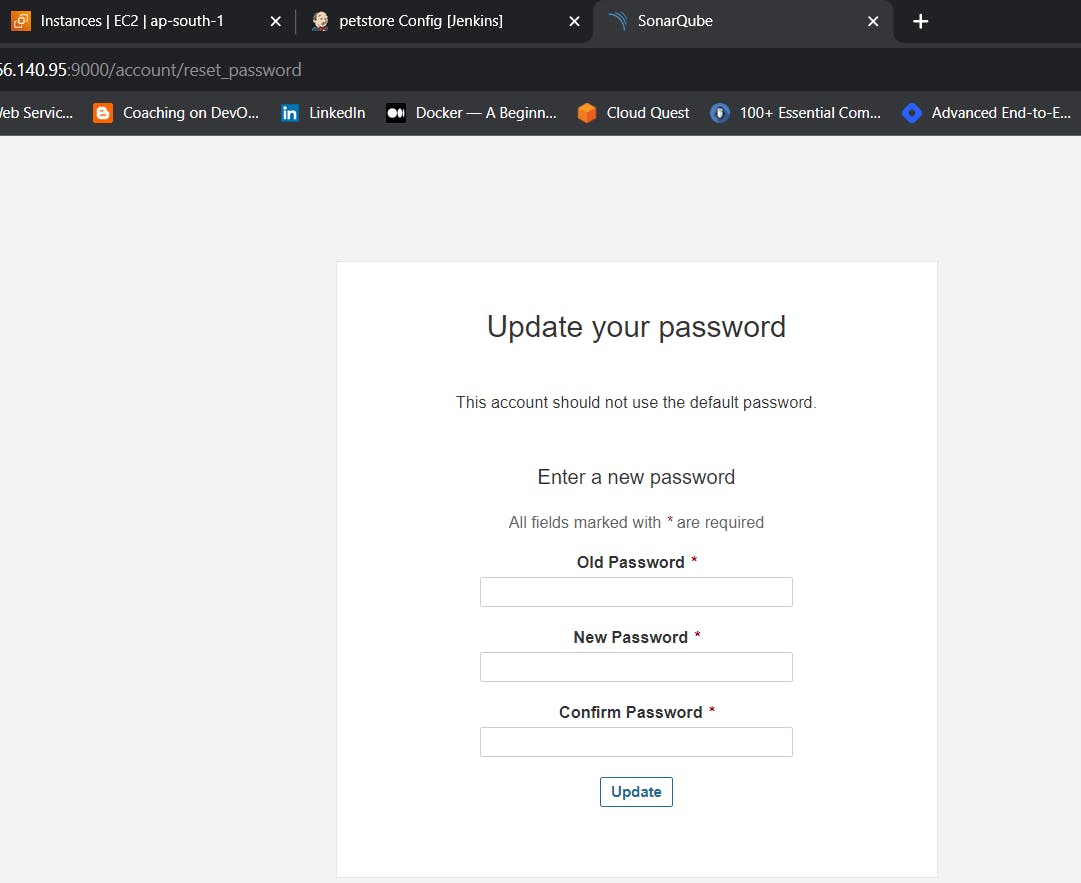

Log in with the default credentials below, then change the password when prompted:

username: admin

password: admin

After you set a new password, you will see the SonarQube dashboard.

Now go back to PuTTY and check whether Trivy, Terraform, the AWS CLI, and kubectl are installed.
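To run that check in one step, you can use a small loop over the expected tools. This is a minimal sketch. The tool list you pass in is up to you, and the version commands in the usage note are the standard flags of each CLI.

```shell
# Minimal sketch: report which of the given commands are on the PATH.
# The exit status is the number of missing tools (0 means all found).
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok:      $tool -> $(command -v "$tool")"
    else
      echo "missing: $tool" >&2
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}
```

For example, run check_tools trivy terraform aws kubectl helm snyk docker. Then print the versions with trivy --version, terraform version, aws --version, and kubectl version --client.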

Create a Snyk account

→ Link to create an account on Snyk

Sign up with Google or any other option you prefer

→ Select your email account

→ You will see the Snyk dashboard

Copy the Snyk auth token from the Snyk dashboard:

Click your account name at the bottom right

→ Select Account settings

Copy the auth token from the account settings

→ Go to the Jenkins dashboard

→ Manage Jenkins → Credentials → Add credential

→ Select Kind as Secret text, paste the token, and save it with the ID snyk

Let’s add a new pipeline.

→ Now add your Terraform files to provision the EKS cluster (create a separate folder for them; the pipeline below uses Eks-terraform)

STEP: Create the EKS cluster from Jenkins

CHANGE YOUR S3 BUCKET NAME IN THE BACKEND.TF

Now create a new job for the EKS provisioning.

I want to choose between apply and destroy only when starting a build, so the job uses a build parameter.

Inside the job, add a choice parameter named action with the values apply and destroy, as in the image below.

Let’s add the pipeline:

```groovy
pipeline {
    agent any
    stages {
        stage('Checkout from Git') {
            steps {
                // Replace 'github-repo' with your repository URL
                git branch: 'main', url: 'github-repo'
            }
        }
        stage('Terraform version') {
            steps {
                sh 'terraform --version'
            }
        }
        stage('Terraform init') {
            steps {
                dir('Eks-terraform') {
                    withCredentials([string(credentialsId: 'snyk', variable: 'snyk')]) {
                        sh 'snyk auth $snyk'
                        // A failed init should stop the build; Snyk findings should not
                        sh 'terraform init'
                        sh 'snyk iac test --report || true'
                    }
                }
            }
        }
        stage('Terraform validate') {
            steps {
                dir('Eks-terraform') {
                    sh 'terraform validate'
                }
            }
        }
        stage('Terraform plan') {
            steps {
                dir('Eks-terraform') {
                    sh 'terraform plan'
                }
            }
        }
        stage('Terraform apply/destroy') {
            steps {
                dir('Eks-terraform') {
                    script {
                        // Single-quoted, so the shell expands ${action},
                        // which Jenkins exports from the build parameter
                        sh 'terraform ${action} -auto-approve'
                    }
                }
            }
        }
    }
}
```
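The final stage passes ${action} straight to Terraform, so an unexpected value produces a confusing error. Below is a hypothetical guard that rejects anything other than apply or destroy. It assumes the job's choice parameter is named action, as in the pipeline above. TF is an assumed override for dry runs.

```shell
# Hypothetical guard for the "Terraform apply/destroy" step: only apply
# and destroy are passed through to Terraform.
# TF is an assumed override (e.g. TF=echo) for dry runs.
TF=${TF:-terraform}

run_tf_action() {
  case "$1" in
    apply|destroy)
      "$TF" "$1" -auto-approve
      ;;
    *)
      echo "action must be 'apply' or 'destroy', got '$1'" >&2
      return 2
      ;;
  esac
}
```

One option is to keep this in a script in the repository and call it from the stage's sh step. An example is a hypothetical scripts/tf-action.sh that defines the function and ends with run_tf_action "$action". Jenkins exports build parameters as environment variables, so $action is available to the script.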

Click Apply and Save, then click Build with Parameters and select apply as the action.

You can follow the progress in the stage view. Provisioning takes up to about 10 minutes.

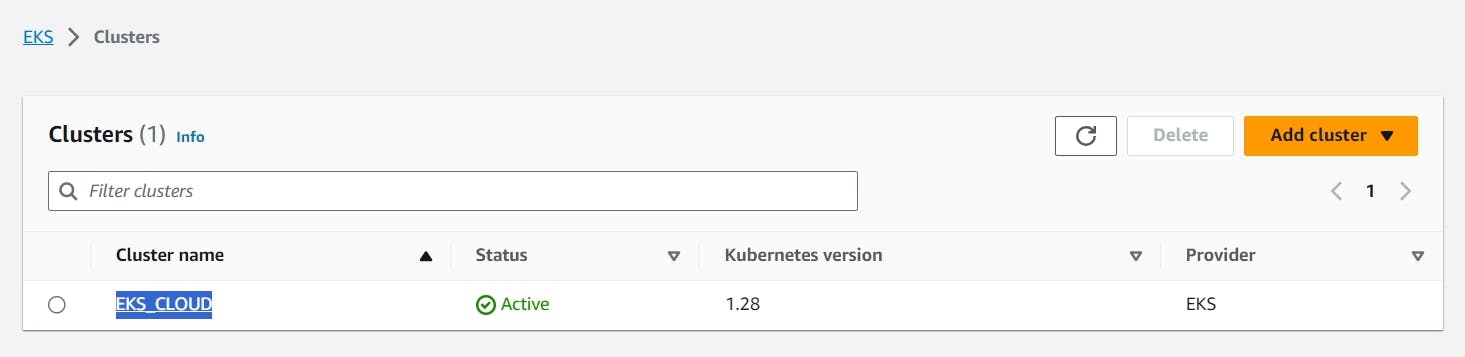

Check in your AWS console whether the EKS cluster was created.

An EC2 instance is also created for the node group.

Add Email Notification

Install the Email Extension plugin in Jenkins.

Open Gmail and click your profile picture.

Then click Manage your Google Account → Security in the left-side panel (enter your password if prompted).

2-Step Verification must be enabled.

Search for “App passwords” in the search bar, as in the image below.

Click Other, enter a name, click Generate, and copy the password.

In the updated interface, you will get a password like this.

Once the plugin is installed, click Manage Jenkins → Configure System. Under the E-mail Notification section, configure the details as shown in the image below. For Gmail, the SMTP server is smtp.gmail.com (port 465 with SSL).

Click Apply and Save.

Click Manage Jenkins → Credentials and add your Gmail address as the username and the generated app password as the password.

This is just to verify the mail configuration.

Now configure the Extended E-mail Notification section as shown in the images below.

Click Apply and Save.

Update Kubernetes configuration on Jenkins server

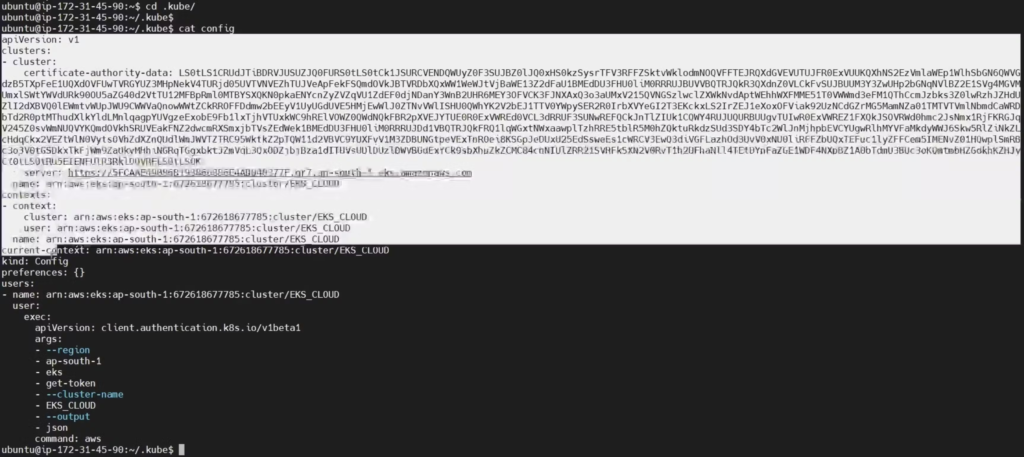

```bash
# aws eks update-kubeconfig --name <CLUSTER_NAME> --region <CLUSTER_REGION>
aws eks update-kubeconfig --name EKS_CLOUD --region ap-south-1
```

STEP: Install Monitoring

Add the Helm repository for the stable Helm charts:

```bash
helm repo add stable https://charts.helm.sh/stable
```

Add the Prometheus community Helm repository:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
```

Create a Kubernetes namespace called “prometheus”:

```bash
kubectl create namespace prometheus
```

Install the kube-prometheus-stack Helm chart from the Prometheus community repository into the “prometheus” namespace:

```bash
helm install stable prometheus-community/kube-prometheus-stack -n prometheus
```
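The namespace creation and chart install above can also be combined into one step that is intended to be safe to re-run. This sketch uses helm upgrade --install with --create-namespace, so a second run upgrades the existing release instead of failing. HELM is an assumed override for dry runs, and the release and namespace names match the guide.

```shell
# Minimal sketch of the monitoring install as one step. HELM is an
# assumed override (e.g. HELM=echo) for dry runs; release "stable" and
# namespace "prometheus" match the commands above.
HELM=${HELM:-helm}

install_monitoring() {
  release=${1:-stable}
  namespace=${2:-prometheus}
  # upgrade --install creates the release the first time and upgrades it
  # later; --create-namespace replaces "kubectl create namespace".
  "$HELM" repo add prometheus-community https://prometheus-community.github.io/helm-charts &&
    "$HELM" repo update &&
    "$HELM" upgrade --install "$release" prometheus-community/kube-prometheus-stack \
      -n "$namespace" --create-namespace
}
```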

Check the pods and services created in the “prometheus” namespace:

```bash
kubectl get pods -n prometheus
kubectl get svc -n prometheus
```

Edit the “stable-kube-prometheus-sta-prometheus” and “stable-grafana” services in the “prometheus” namespace and change spec.type from ClusterIP to LoadBalancer:

```bash
# Run these one at a time
kubectl edit svc stable-kube-prometheus-sta-prometheus -n prometheus
kubectl edit svc stable-grafana -n prometheus
```

Check the services in the “prometheus” namespace again:

```bash
kubectl get svc -n prometheus
```

Copy Grafana's LoadBalancer URL from the service list and log in to Grafana with these credentials:

UserName: admin

Password: prom-operator
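Instead of using kubectl edit, you can change the service type with kubectl patch, which is easier to script. Below is a minimal sketch. KUBECTL and NS are assumed overrides. On EKS, the load balancer's address appears under .status.loadBalancer.ingress[0].hostname once AWS has provisioned it.

```shell
# Minimal sketch: switch a service to type LoadBalancer without an editor,
# then read the hostname AWS assigns. KUBECTL and NS are assumed overrides
# (e.g. KUBECTL=echo for a dry run).
KUBECTL=${KUBECTL:-kubectl}
NS=${NS:-prometheus}

expose_service() {
  "$KUBECTL" patch svc "$1" -n "$NS" -p '{"spec":{"type":"LoadBalancer"}}'
}

service_hostname() {
  # Prints nothing until the load balancer has been provisioned.
  "$KUBECTL" get svc "$1" -n "$NS" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}
```

For example, run expose_service stable-grafana and expose_service stable-kube-prometheus-sta-prometheus. A few minutes later, run service_hostname stable-grafana to get the Grafana URL.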

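Step: Update the monitoring stack

Add the following prometheus.yml to the Jenkins server (the container command below mounts it from /home/ubuntu/prometheus.yml):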

```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label job=<job_name> to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "jenkins"
    metrics_path: "/prometheus"
    static_configs:
      - targets: ["jenkins-ip:8080"] # replace jenkins-ip with your Jenkins server's IP
```

Start the Prometheus container with the command below:

```bash
docker run -d --name prometheus-container -v /home/ubuntu/prometheus.yml:/etc/prometheus/prometheus.yml -e TZ=UTC -p 9090:9090 ubuntu/prometheus:2.50.0-22.04_stable
```

InfluxDB container:

```bash
docker run -d -p 8086:8086 --name influxdb2 influxdb:1.8.6-alpine
```

Connect to the container and run the InfluxDB commands below:

```bash
docker exec -it influxdb2 bash
influx
```

```sql
CREATE DATABASE "jenkins" WITH DURATION 1825d REPLICATION 1 NAME "jenkins-retention"
SHOW DATABASES
USE jenkins
```
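You can also run the same InfluxQL without an interactive shell by using the influx 1.x CLI's -execute flag. Below is a minimal sketch. DOCKER is an assumed override for dry runs. The container name influxdb2 matches the docker run command above.

```shell
# Minimal sketch: run InfluxQL non-interactively with the influx 1.x CLI.
# DOCKER is an assumed override (e.g. DOCKER=echo) for dry runs.
DOCKER=${DOCKER:-docker}

influx_exec() {
  "$DOCKER" exec influxdb2 influx -execute "$1"
}

create_jenkins_db() {
  influx_exec 'CREATE DATABASE "jenkins" WITH DURATION 1825d REPLICATION 1 NAME "jenkins-retention"' &&
    influx_exec 'SHOW DATABASES'
}
```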

Queries

Here is the repo with the Influx JSON file:

https://github.com/Aj7Ay/EKS-OBSERVABILITY/tree/main

Jenkins health → use the Prometheus data source

```
up{instance="jenkins-ip:8080", job="jenkins"}
```

Jenkins executor | node | queue count → use the Prometheus data source

```
jenkins_executor_count_value
jenkins_node_count_value
jenkins_queue_size_value
```

Jenkins overall → InfluxDB

```sql
SELECT count(build_number) FROM "jenkins_data" WHERE ("build_result" = 'SUCCESS' OR "build_result" = 'CompletedSuccess')
SELECT count(build_number) FROM "jenkins_data" WHERE ("build_result" = 'FAILURE' OR "build_result" = 'CompletedError')
SELECT count(build_number) FROM "jenkins_data" WHERE ("build_result" = 'ABORTED' OR "build_result" = 'Aborted')
SELECT count(build_number) FROM "jenkins_data" WHERE ("build_result" = 'UNSTABLE' OR "build_result" = 'Unstable')
```

Pipelines run in the last 10 minutes → InfluxDB

```sql
SELECT count(project_name) FROM jenkins_data WHERE time >= now() - 10m
```

Total builds → InfluxDB

```sql
SELECT count(build_number) FROM "jenkins_data"
```

Last build status → InfluxDB

```sql
SELECT build_result FROM "jenkins_data" WHERE $timeFilter ORDER BY time DESC LIMIT 1
```

Average time → InfluxDB

```sql
SELECT build_time/1000 FROM jenkins_data WHERE $timeFilter
```

Last 10 build details → InfluxDB

```sql
SELECT "build_exec_time", "project_name", "build_number", "build_causer", "build_time", "build_result" FROM "jenkins_data" WHERE $timeFilter ORDER BY time DESC LIMIT 10
```

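To test the dashboard, you can run a simple pipeline like this one, which intentionally marks the build as UNSTABLE:

```groovy
pipeline {
    agent any
    stages {
        stage('Hello World') {
            steps {
                script {
                    echo 'Hello World!'
                    // Intentionally mark the build as unstable
                    currentBuild.result = 'UNSTABLE'
                }
            }
        }
    }
}
```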

Install plugins such as JDK, SonarQube Scanner, Docker, and Kubernetes

Go to Manage Jenkins → Plugins → Available plugins and install the plugins below:

→ Pipeline: Stage View

→ Eclipse Temurin Installer

→ SonarQube Scanner

→ Docker

→ Docker commons

→ Docker pipeline

→ Docker API

→ Docker Build step

→ Kubernetes

→ Kubernetes CLI

→ Kubernetes Client API

→ Kubernetes Pipeline DevOps steps

Configure Java and Node.js in Global Tool Configuration

Go to Manage Jenkins → Tools → Install JDK (17) → Click Apply and Save

Grab the public IP address of your EC2 instance. SonarQube runs on port 9000, so open ec2-ip:9000 in the browser.

Click Administration → Security → Users, click the Tokens (Update Tokens) button for your user, give the token a name, and click Generate.

Copy the token.

Go to Jenkins Dashboard → Manage Jenkins → Credentials → Add Secret Text. It should look like this

You will see this page once you click Create.

Now, go to Dashboard → Manage Jenkins → System and Add like the below image.

Click on Apply and Save

The Configure System option is used in Jenkins to configure different servers.

Global Tool Configuration is used to configure different tools that we install using Plugins

We will install the SonarQube Scanner under Tools.

Manage Jenkins –> Tools –> SonarQube Scanner

In the SonarQube dashboard, also add a webhook for the quality gate:

Administration → Configuration → Webhooks

Click Create

Add the details, using the following URL:

http://jenkins-public-ip:8080/sonarqube-webhook/

To see the report, you can go to Sonarqube Server and go to Projects.

For the Docker tool:

Go to Manage Jenkins → Credentials

Add your Docker Hub username and password under Global credentials

Now, on the Jenkins instance, run this command:

```bash
aws eks update-kubeconfig --name <clustername> --region <region>
```

It generates a Kubernetes configuration file. Here is the path to the config file:

```bash
cd ~/.kube
cat config
```

Copy the file's contents and save them as a text file on your local machine, with any name and at any location you like.

If you have any doubts, refer to the YouTube video.