The Magical Connector for AI: Understanding the Model Context Protocol (MCP)!

Have you ever wondered how smart AI assistants, like the ones that write stories or answer your questions, get information from all over the internet or even from your computer? It’s like they have a superpower! Today, we’re going to talk about something called the Model Context Protocol (MCP), which is like a secret key that helps AI assistants become even smarter and more helpful.

Part 1: Explaining MCP

Imagine you have many different toys, like a remote-control car, a robot, and a talking doll. Each toy needs its own special charger or connector to work. It’s like having a big box full of tangled wires, and you have to find the right one for each toy every time you want to play. What a mess!

Now, imagine if all your toys could use just one special connector – let’s call it the “Magic Universal Connector”. You could plug in your car, then unplug it and plug in your robot, all with the same easy-to-use cable! That would be awesome, right?

That’s exactly what MCP is for AI!

- Your AI assistant (like a smart robot brain) needs information (called “context”) to be helpful.

- This information can come from many places: like the internet (to search for things), or a special book (a database), or even other smart apps (like a weather app). These are like your AI’s “toys” or “tools”.

- Before MCP, every time your AI wanted to use a new “toy” or get information from a new place, someone had to build a special, complicated connection just for that one toy. It was like having a different charger for every single toy, making things messy and hard to manage.

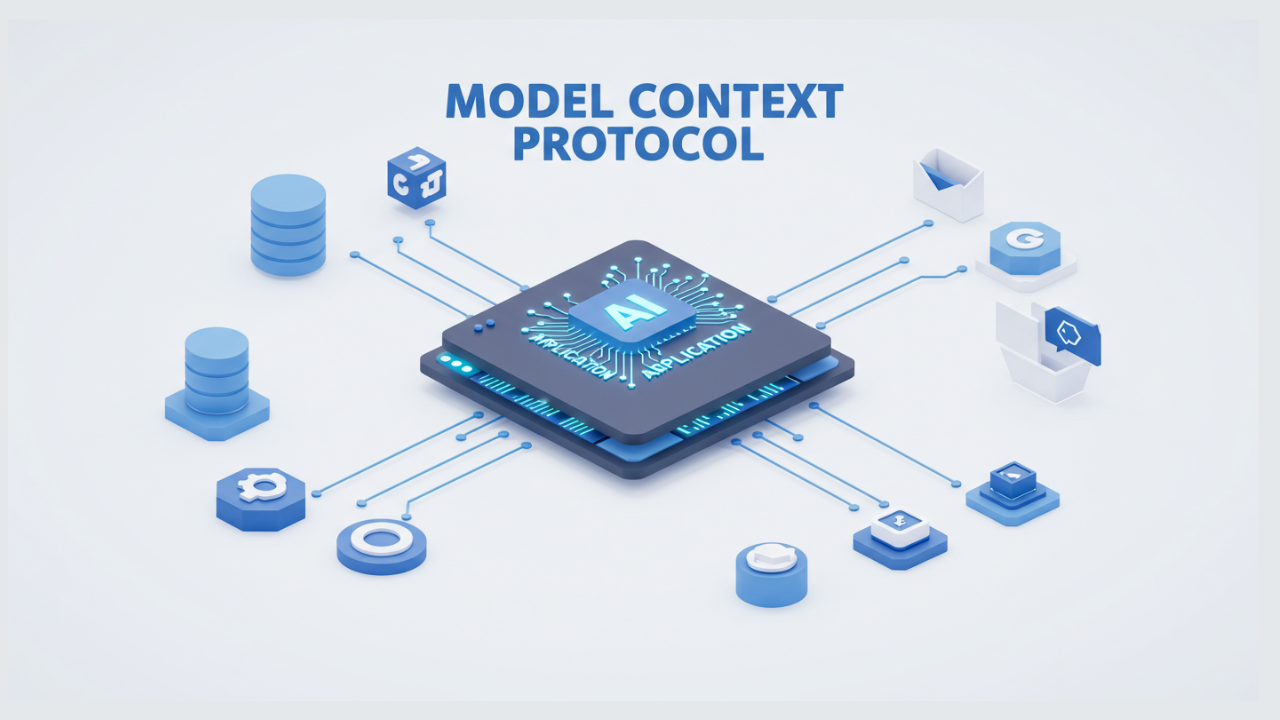

- MCP is the “Magic Universal Connector” for AI! It’s a special set of rules that all AI “toys” and AI “brains” agree to follow. This means that once they all use MCP, your AI assistant can easily connect to any tool or data source without needing a brand new, custom-made wire every single time. It’s like “plug-and-play” for AI!

So, in simple terms, MCP helps AI brains talk to all their tools and information sources using one easy, standard way, making AI super powerful and simple to build with.

Part 2: Detailed Explanation

The Model Context Protocol (MCP) is a significant step forward in connecting Large Language Models (LLMs) to external systems and data sources. It was released by Anthropic as an open standard.

What is MCP?

At its core, MCP is an open protocol that standardizes how applications provide context to LLMs. It acts as a universal connector for AI models, allowing them to interact seamlessly with diverse data sources, tools, and APIs. Think of it as the USB-C equivalent for AI applications, creating a plug-and-play system for various AI models (like GPT-4, Claude, Gemini) and their contextual needs.

Why is MCP Important? (The Problem it Solves)

Before MCP, the landscape of LLM integration faced several significant challenges:

- LLM Limitations: On their own, LLMs generate text by predicting from patterns in their training data. They can “hallucinate,” and without external context they know nothing newer than that data and cannot act on the world. To perform complex tasks (e.g., sending emails or searching real-time data), they need to interact with external tools.

- Integration Complexity: Connecting LLMs to external tools (like databases, web search, APIs, or local file systems) required custom, bespoke integration code for each connection. This led to a “combinatorial explosion”: connecting N AI applications to M different tools required up to N x M custom integrations, which quickly became chaotic to maintain.

- Maintenance Nightmare: Whenever an external tool or service updated its API or functionality, the custom integration code also needed to be updated. This created a continuous burden on developers to manage and maintain large code repositories.

- Lack of Scalability: The custom integration approach made it incredibly difficult to scale AI assistants to interact with many tools and service providers.

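MCP addresses this by providing a single, standardized protocol for AI systems to access context, replacing fragmented integrations. Each application implements an MCP client once and each tool is exposed through an MCP server once, so connecting N applications to M tools takes roughly N + M implementations instead of N x M.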
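To make the “N x M” point concrete, here is a back-of-the-envelope count in Python. It assumes, as a simplification, that without a shared protocol every application-tool pair needs its own connector, while with MCP each application implements one client and each tool is wrapped by one server.

```python
# Rough count of integrations needed to connect N AI applications to M tools.
# Simplifying assumption: one bespoke connector per (app, tool) pair without a
# shared protocol, versus one client per app plus one server per tool with MCP.


def custom_integrations(n_apps: int, m_tools: int) -> int:
    """Without a shared protocol: one custom connector for every app-tool pair."""
    return n_apps * m_tools


def mcp_implementations(n_apps: int, m_tools: int) -> int:
    """With a shared protocol: each app builds one client, each tool gets one server."""
    return n_apps + m_tools


if __name__ == "__main__":
    for n, m in [(3, 5), (10, 100)]:
        print(f"{n} apps x {m} tools: {custom_integrations(n, m)} custom connectors "
              f"vs {mcp_implementations(n, m)} MCP implementations")
```

The gap grows quickly: at 10 applications and 100 tools, the pairwise approach needs 1,000 connectors while the shared-protocol approach needs 110 pieces of work.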

How Does MCP Work? (Architecture and Communication Flow)

MCP operates on a client-server model with three primary components:

- MCP Host: This is your AI application or agent environment, such as Claude Desktop, Cursor, Windsurf, or a custom AI application. It’s the “brain” that needs data or needs to perform an action, and it’s where the interaction with servers is implemented. The host creates and manages MCP clients.

- MCP Client: Located within the MCP Host, each client establishes and maintains a one-to-one connection with a single MCP Server and sends requests to it using MCP.

- MCP Server: This is a lightweight program that knows how to communicate with a specific tool, service, or data source and exposes it using the MCP standard. For example, an MCP server for GitHub knows how to talk to the GitHub API, and one for a local database knows how to speak SQL to it. Many servers are built and maintained by the service providers themselves, though others come from the community or are written in-house. When the provider maintains the server, updates to the tool can be handled on the server side without requiring changes to the client’s integration code, as long as the interface the server exposes stays compatible.
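To make the server’s role concrete, here is a toy, in-process stand-in for an MCP server in Python. It is not the official MCP SDK: it only mimics the JSON-RPC 2.0 request and response shapes that MCP uses for listing tools (`tools/list`) and calling them (`tools/call`), and the `get_weather` tool with its canned data is hypothetical. A real server would also handle connection setup, a transport such as stdio or HTTP, and many more error cases.

```python
import json
from typing import Any


def get_weather(city: str) -> str:
    """Hypothetical tool. A real server would call an external weather API here."""
    canned = {"Paris": "18°C, cloudy", "Tokyo": "24°C, sunny"}
    return canned.get(city, "no data")


class ToyMCPServer:
    """Toy stand-in for an MCP server: it advertises tools and runs them on request."""

    def __init__(self) -> None:
        self.tools: dict[str, dict[str, Any]] = {
            "get_weather": {
                "description": "Get the current weather for a city.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"city": {"type": "string"}},
                    "required": ["city"],
                },
                "handler": get_weather,
            }
        }

    def handle(self, raw_request: str) -> str:
        """Answer one JSON-RPC 2.0 request (a JSON string) with a JSON string."""
        req = json.loads(raw_request)
        method, params = req["method"], req.get("params", {})

        def reply(key: str, value: Any) -> str:
            return json.dumps({"jsonrpc": "2.0", "id": req["id"], key: value})

        if method == "tools/list":
            # Discovery: describe every tool without exposing its implementation.
            return reply("result", {"tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in self.tools.items()
            ]})
        if method == "tools/call":
            tool = self.tools.get(params.get("name"))
            if tool is None:
                return reply("error", {"code": -32602, "message": "Unknown tool"})
            text = tool["handler"](**params.get("arguments", {}))
            return reply("result", {"content": [{"type": "text", "text": text}], "isError": False})
        # Standard JSON-RPC error code for an unsupported method.
        return reply("error", {"code": -32601, "message": "Method not found"})


if __name__ == "__main__":
    server = ToyMCPServer()
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "get_weather", "arguments": {"city": "Paris"}}}
    print(server.handle(json.dumps(call)))
```

The client never sees how `get_weather` works, only its name, description, and input schema. That separation is what lets a provider change the code behind a tool without breaking the clients that call it.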

The communication flow using MCP generally follows these steps:

- Input: A user provides an input or query to the AI application (MCP Host with its LLM).

- Tool Discovery: The MCP Host, via its MCP Client, asks the connected MCP Servers what tools or services they expose. Each server returns a description of its capabilities, including tool names, descriptions, and required parameters, with the input parameters described in a standardized format (JSON Schema).

- LLM Tool Selection: The LLM receives the user’s question along with the descriptions of all available tools. Based on its understanding of the query and the tool descriptions, the LLM decides which tool(s) to use and extracts any necessary parameters from the user’s query.

- Tool Execution: Armed with the LLM’s decision, the MCP Host uses the corresponding MCP Client to call the chosen tool on its MCP Server. The server interacts with the actual external tool (e.g., makes an HTTP call to an API).

- Context Return & Output: The MCP Server processes the tool’s response and provides the results (context) back to the LLM via the MCP Client and Host. The LLM then uses this new context to formulate a comprehensive and accurate final output to the user.
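The five steps can be traced in a compact Python sketch. Everything here is hypothetical and runs in one process: `list_tools` and `call_tool` play the server, and `fake_llm_select` is a keyword-matching stub standing in for the LLM’s tool choice, which a real model would make by reading the tool descriptions. No real MCP SDK or model API is used, and the client-server messaging is collapsed into plain function calls.

```python
from typing import Any

# --- Server side: hypothetical tools (a real server would call external APIs) ---
TOOLS: dict[str, dict[str, Any]] = {
    "get_weather": {
        "description": "Get the current weather for a city.",
        "params": ["city"],
        "fn": lambda city: {"Paris": "18°C, cloudy"}.get(city, "no data"),
    },
    "search_web": {
        "description": "Search the web for a query.",
        "params": ["query"],
        "fn": lambda query: f"Top result for '{query}'",
    },
}


def list_tools() -> list[dict[str, Any]]:
    """Tool discovery: the server describes what it offers (names, descriptions, params)."""
    return [{"name": n, "description": t["description"], "params": t["params"]}
            for n, t in TOOLS.items()]


def call_tool(name: str, arguments: dict[str, str]) -> str:
    """Tool execution: the server runs the chosen tool and returns its output as context."""
    return TOOLS[name]["fn"](**arguments)


# --- Host side ---
def fake_llm_select(query: str, tools: list[dict[str, Any]]) -> tuple[str, dict[str, str]]:
    """Stand-in for LLM tool selection. A real model would reason over the descriptions;
    this stub only matches a keyword and naively takes the last word as the city."""
    available = {t["name"] for t in tools}
    if "weather" in query.lower() and "get_weather" in available:
        return "get_weather", {"city": query.rstrip("?").split()[-1]}
    return "search_web", {"query": query}


def answer(query: str) -> str:
    tools = list_tools()                        # 2. discovery
    name, args = fake_llm_select(query, tools)  # 3. selection (stubbed)
    context = call_tool(name, args)             # 4. execution
    # 5. context return: a real LLM would turn this into a natural-language reply.
    return f"Using {name}: {context}"


if __name__ == "__main__":
    print(answer("What is the weather in Paris?"))  # 1. user input
```

Swapping the stub for a real model changes only step 3; discovery, execution, and context return keep the same shape, which is the point of standardizing them.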

Core Primitives of MCP

MCP defines core “primitives” that enable this standardized communication:

Server-Side Primitives:

- Prompts: Instructions or templates that can be injected into the LLM’s context to guide how it approaches a task.

- Resources: Structured data (such as files, database records, or documents) that can be included in the LLM’s context window.

- Tools: Executable functions that the LLM can call to retrieve information or perform actions beyond its built-in knowledge.
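As an illustration of how the three server-side primitives end up in front of the model, the Python sketch below flattens a prompt, a resource, and a tool description into one block of text. The descriptor fields loosely follow MCP’s (`name`, `description`, `arguments`, `uri`, `mimeType`), but the `template` field, the sample data, and `build_context` are hypothetical. Real hosts usually pass tool definitions through the model API’s dedicated tool-calling interface rather than as plain text.

```python
from string import Template
from typing import Any

# Hypothetical descriptors, loosely following the fields MCP uses for each primitive.
# "template" is an illustrative server-side detail, not a protocol field.
PROMPT = {
    "name": "summarize_notes",
    "description": "Summarize notes for a busy reader.",
    "arguments": [{"name": "style", "required": True}],
    "template": "Summarize the attached notes in a $style style.",
}
RESOURCE = {"uri": "file:///notes/meeting.txt", "mimeType": "text/plain",
            "text": "Decided to ship v2 on Friday."}
TOOL = {"name": "send_email", "description": "Send an email to a recipient."}


def build_context(prompt: dict[str, Any], args: dict[str, str],
                  resources: list[dict[str, Any]], tools: list[dict[str, Any]]) -> str:
    """Flatten the three server-side primitives into one text block for the LLM."""
    missing = [a["name"] for a in prompt["arguments"]
               if a.get("required") and a["name"] not in args]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    # Prompt: instructions on how to approach the task.
    parts = [Template(prompt["template"]).substitute(args)]
    # Resources: data the model should read.
    parts += [f"[resource {r['uri']}]\n{r['text']}" for r in resources]
    # Tools: actions the model may ask the host to perform.
    parts += [f"[tool] {t['name']}: {t['description']}" for t in tools]
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_context(PROMPT, {"style": "bullet-point"}, [RESOURCE], [TOOL]))
```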

Client-Side Primitives:

- Roots: Let the client tell a server which locations (for example, specific directories on the local file system) it should work within, so the AI application can share local files safely.

- Sampling: Allows a server to request the LLM’s help when needed (e.g., to generate a relevant database query based on a schema). This fosters a two-way interaction.
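Roots are best understood as boundaries the client advertises and a well-behaved server respects. The Python sketch below shows one way a server might enforce them before touching a file. It assumes the client has already shared its roots as `file://` URIs, in a shape loosely modeled on MCP’s `roots/list` result; the paths and the helper names `root_paths` and `is_within_roots` are hypothetical.

```python
from pathlib import Path
from urllib.parse import unquote, urlparse

# Shape loosely modeled on an MCP "roots/list" result; the URI and name are made up.
ROOTS_RESULT = {
    "roots": [{"uri": "file:///home/alice/projects/demo", "name": "Demo project"}],
}


def root_paths(result: dict) -> list[Path]:
    """Turn file:// root URIs into resolved local paths (other schemes are skipped)."""
    paths = []
    for root in result["roots"]:
        parsed = urlparse(root["uri"])
        if parsed.scheme == "file":
            paths.append(Path(unquote(parsed.path)).resolve())
    return paths


def is_within_roots(candidate: str, roots: list[Path]) -> bool:
    """True if the candidate resolves to a root or to something inside one.

    Resolving first normalizes '..' segments, so they cannot be used to
    step outside a root.
    """
    target = Path(candidate).resolve()
    return any(target == root or root in target.parents for root in roots)


if __name__ == "__main__":
    roots = root_paths(ROOTS_RESULT)
    print(is_within_roots("/home/alice/projects/demo/src/app.py", roots))
    print(is_within_roots("/home/alice/projects/demo/../../.ssh/id_rsa", roots))
```

Comparing resolved paths, rather than raw string prefixes, matters here: a naive prefix check would wrongly accept `/home/alice/projects/demo-other` as being inside `/home/alice/projects/demo`.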

Benefits of Adopting MCP

The adoption of MCP offers several compelling advantages:

- Reduced Integration Code: Developers no longer need to write custom integration code for each external tool. This significantly simplifies the development process.

- Simplified Maintenance: When service providers maintain their own MCP servers, updates to the underlying tools are handled on the server side and, as long as the server’s exposed interface stays compatible, do not affect the client’s existing integration.

- Enhanced Scalability: AI applications can easily connect to any number of tools, as all tools adhere to the common MCP standard.

- Empowered “Vibe Coding”: MCP makes “vibe coding” (describing what you want in plain language and letting the AI handle the implementation) more realistic. Users can tell the AI what they want, and the AI, via MCP, handles the underlying “grunt work” of connecting to and using various tools.

- Standardized Communication: By defining standard schemas for tool descriptions and input/output, MCP ensures a predictable and uniform way for AI systems to interact with external services.
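Because tool inputs are described with a standard schema, a host can check an LLM’s proposed arguments before calling a tool. The Python sketch below is deliberately minimal and hypothetical: the `get_forecast` tool is made up, and the checker handles only the `required` list and a few scalar `type` keywords from JSON Schema, whereas a production host would use a full JSON Schema validator.

```python
from typing import Any

# Hypothetical tool description; "inputSchema" uses JSON Schema, as MCP tool listings do.
FORECAST_TOOL = {
    "name": "get_forecast",
    "description": "Get a weather forecast for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}, "days": {"type": "integer"}},
        "required": ["city"],
    },
}

# Python types accepted for a few simple JSON Schema "type" keywords.
SIMPLE_TYPES: dict[str, Any] = {"string": str, "integer": int,
                                "number": (int, float), "boolean": bool}


def check_arguments(tool: dict[str, Any], arguments: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the arguments passed these checks.

    Only 'required' and simple scalar 'type' checks are covered, not full JSON Schema.
    """
    schema = tool["inputSchema"]
    problems = [f"missing required argument '{key}'"
                for key in schema.get("required", []) if key not in arguments]
    for key, value in arguments.items():
        spec = schema.get("properties", {}).get(key)
        if spec is None:
            continue  # JSON Schema allows extra properties unless the schema forbids them
        expected = SIMPLE_TYPES.get(spec.get("type"))
        # bool is a subclass of int in Python, so reject it explicitly for numeric types.
        wrong_bool = isinstance(value, bool) and spec.get("type") != "boolean"
        if expected is not None and (not isinstance(value, expected) or wrong_bool):
            problems.append(f"argument '{key}' should be of type {spec['type']}")
    return problems


if __name__ == "__main__":
    print(check_arguments(FORECAST_TOOL, {"city": "Oslo", "days": 3}))
    print(check_arguments(FORECAST_TOOL, {"days": "3"}))
```

For example, `check_arguments(FORECAST_TOOL, {"days": 3})` reports the missing `city` argument, which the host could feed back to the LLM instead of making a call that is bound to fail.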

Real-World Examples and Future Outlook

Developers are already creating numerous integrations using MCP for systems like Google Drive, Slack, GitHub, Git, PostgreSQL, Google Maps, Todoist, and even custom weather APIs. SDKs are available in multiple languages like TypeScript and Python, making implementation accessible.

MCP is still in its early days, and reactions range from enthusiasm to caution, but it holds significant potential. It’s positioned to become a foundational technology for building sophisticated AI applications that need to interact with diverse data sources and tools. Its open-source nature and growing ecosystem will be crucial to widespread adoption.

How does MCP simplify AI integration?

The Model Context Protocol (MCP) significantly simplifies AI integration by addressing the challenges of connecting Large Language Models (LLMs) to a vast array of external tools and data sources. Before MCP, this integration landscape was characterized by complex, custom solutions, often referred to as an “N x M problem”.

Here’s how MCP simplifies AI integration:

- Eliminating Custom Integration Code: Previously, integrating an AI assistant with external tools required writing separate, custom integration code for each specific tool. If your LLM needed to interact with 100 different tools, you would have to write and manage bespoke code for each one. MCP solves this by providing a “Magic Universal Connector,” a “USB-C port for AI applications,” so developers no longer need to write custom integration code for each connection. Instead, an MCP-compatible AI application can simply connect to a tool’s MCP server and use it. This dramatically simplifies the development process.

- Simplifying Maintenance and Updates: A major challenge before MCP was the maintenance nightmare. If an external tool or service updated its API or functionality, developers had to update their custom integration code as well. With MCP, servers are often built and maintained by the service providers themselves. When the underlying tool changes, the provider updates its server, and as long as the tools the server exposes remain compatible, your AI application’s integration code does not need to change. This makes systems less brittle and easier to manage.

- Enhancing Scalability: The need for custom integration code made it difficult to scale AI assistants to interact with many tools and service providers. MCP provides a common protocol that participating tool providers adhere to, allowing LLMs to connect with “any number of tools”. This uniform approach makes it far easier to scale AI applications across a diverse and growing ecosystem of services.

- Standardizing Communication: MCP acts as a standardized rule book, defining how AI models ask for and receive context and information from external sources. It outlines a predictable and uniform way of communication through standardized schemas for tool descriptions, input, and output. This common protocol means that whether an AI needs code from a repository, data from a database, or information from Slack, the interaction follows a consistent set of rules.

- Empowering “Vibe Coding”: MCP makes “vibe coding” (describing what you want in plain language and letting the AI handle the implementation) more powerful and realistic. Users can instruct the AI in plain English, and the AI, through MCP, handles “all of the grunt work” of connecting to and using various tools. Orchestrating workflows across services like GitHub, Slack, and Stripe then becomes a matter of focusing on “the what, not the how” of the connections.

In essence, MCP provides a universal open standard for connecting AI systems with data sources, effectively replacing fragmented integrations with a single, coherent protocol. This is demonstrated by existing integrations with systems like Google Drive, Slack, GitHub, Git, and PostgreSQL.
